Feb 2014

First realtime mobile 3D scanner

This product was a fully mobile device (a self-contained box) that performed real-time 3D scanning entirely on the device, with no cloud or offline processing.

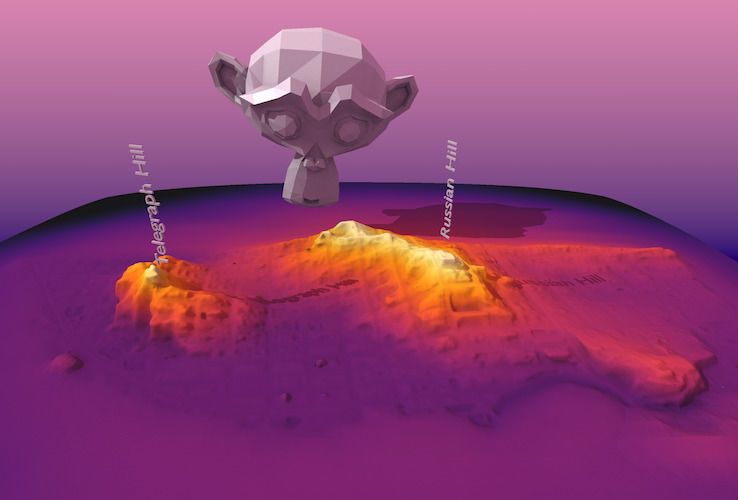

Back at UT I joined a research group called the UT Perception lab. There we worked on real-time 3D reconstruction techniques using GPU processing. I helped implement Kinect Fusion in CUDA, a CUDA raytracer for visualizing the scanning volume, and other 3D reconstruction techniques using heightmaps on manifolds.
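For readers unfamiliar with Kinect Fusion, its core step is fusing each depth frame into a voxel grid of truncated signed distances (TSDF) with a running weighted average. The sketch below shows that per-voxel update in plain Python rather than CUDA. It is only an illustration of the published technique, not our implementation; the function name, the unit per-frame weight, and the `max_weight` cap are assumptions made for the example.

```python
def tsdf_update(tsdf, weight, sdf, trunc, max_weight=64.0):
    """Fuse one signed-distance observation into a single voxel.

    tsdf, weight: current voxel state (normalised distance, accumulated weight).
    sdf: measured signed distance to the surface along the camera ray (metres).
    trunc: truncation distance (metres).
    Returns the new (tsdf, weight) pair.
    """
    if sdf < -trunc:
        # The voxel lies well behind the observed surface (occluded),
        # so this frame says nothing about it: leave it unchanged.
        return tsdf, weight

    # Normalise by the truncation distance and clamp to at most 1.
    d = min(1.0, sdf / trunc)

    # Running weighted average, with each frame contributing weight 1 (assumed).
    new_tsdf = (tsdf * weight + d) / (weight + 1.0)
    new_weight = min(weight + 1.0, max_weight)
    return new_tsdf, new_weight


# Two observations of the same voxel, then one occluded observation.
state = tsdf_update(0.0, 0.0, sdf=0.05, trunc=0.1)
state = tsdf_update(*state, sdf=0.0, trunc=0.1)
state = tsdf_update(*state, sdf=-0.5, trunc=0.1)
```

On a GPU the same update runs once per voxel in parallel, which is what makes the approach a good fit for CUDA.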

I also designed all the hardware and handled sourcing the motherboards, screens, and controls, as well as manufacturing the case.

Our team won international awards and presented our work at industry conferences:

- Nvidia GTC

- General Motors Innovation Day

- SBIR Innovation Summit

- Idea To Product Austin Winners

We went on to get additional NSF SBIR Phase II funding to continue 3D scanning research.

I worked on some cool projects during this time, including:

HD Texture mapping on 3D scans

For the visual quality of 3D scans, textures are just as important as the geometry, if not more so. The VGA camera built into the Microsoft Kinect is not suitable for texture mapping, so we connected an HD webcam to the sensor. I created and implemented a novel image alignment technique and used state-of-the-art texture blending techniques.
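The basic building block of texture mapping from an external camera is projecting each mesh vertex into the HD image to obtain a texture coordinate. The sketch below shows that step with a standard pinhole camera model. It is not the alignment technique mentioned above; it assumes the vertex has already been transformed into the webcam's coordinate frame, and the intrinsics (`fx`, `fy`, `cx`, `cy`) and image size are hypothetical values chosen for illustration.

```python
def project_to_uv(point_cam, fx, fy, cx, cy, width, height):
    """Project a 3D point in the colour camera's frame to texture coordinates.

    Returns (u, v) normalised to [0, 1), or None if the point is behind
    the camera or projects outside the image.
    """
    x, y, z = point_cam
    if z <= 0.0:
        return None  # behind the camera, cannot be textured from this view

    # Pinhole projection to pixel coordinates.
    px = fx * x / z + cx
    py = fy * y / z + cy

    if not (0.0 <= px < width and 0.0 <= py < height):
        return None  # outside the field of view

    return px / width, py / height


# Hypothetical 1920x1080 camera with the principal point at the image centre.
uv = project_to_uv((0.0, 0.0, 1.0), fx=1000.0, fy=1000.0,
                   cx=960.0, cy=540.0, width=1920, height=1080)
```

With per-vertex color, each vertex would instead store a single sampled color, so the detail is limited by mesh density rather than by image resolution.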

With standard per-vertex color:

With HD texturing:

3D Sensor (Structured Light) Calibration

Accuracy of our 3D scans was Lynx Labs' primary competitive advantage, after the speed and simple interface. To get globally consistent room-scale models, we improved upon the standard calibration of the Structure Sensor and implemented a custom stereo vision pipeline.
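A rough way to see why calibration matters so much at room scale: structured-light and stereo sensors recover depth by triangulation, and a fixed disparity error turns into a depth error that grows with the square of the distance. The sketch below uses the standard stereo relations; the focal length, baseline, and error values are made-up numbers for illustration, not the Structure Sensor's actual parameters or our measured results.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Triangulated depth (metres) from disparity: Z = f * b / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px, baseline_m, depth_m, disparity_error_px):
    """First-order depth error (metres) caused by a small disparity error.

    Differentiating Z = f * b / d gives |dZ| = Z**2 / (f * b) * |dd|.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical sensor: 600 px focal length, 7.5 cm baseline, 0.1 px error.
near = depth_error(600.0, 0.075, depth_m=1.0, disparity_error_px=0.1)
far = depth_error(600.0, 0.075, depth_m=4.0, disparity_error_px=0.1)
ratio = far / near  # error grows with depth squared
```

Under these assumed numbers the same disparity error costs sixteen times more depth accuracy at 4 m than at 1 m, which is why small calibration biases show up as warped walls in large scans.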

Reconstruction using factory calibration:

Reconstruction using custom calibration and stereo vision pipeline:
