Dec 2017

Free VR Fractal Visualizer & Manipulation Software for Vive

Ṛta is the principle of natural order which regulates and coordinates the operation of the universe and everything within it. In the hymns of the Vedas, Ṛta is described as that which is ultimately responsible for the proper functioning of the natural, moral and sacrificial orders.

I am excited to share the next version of the FORM fractal visualizer, using a new compute shader surface calculation and rendering architecture.

⬇️ Download for SteamVR (15MB)

⬇️ Download for Oculus Rift (32MB)

As with the first FORM, this is beta software.

I’d love to hear any feedback; email me at nshelton at gmail dot com.

Background & Previous Work

Raymarching is an elegant way to describe and render awesome fractals and mathematical structures, but full-frame raymarching is quite expensive for VR, which limits the complexity of the distance field and the types of lighting and effects you can afford. How crazy can we make them? Below are some details.
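For readers new to the technique, here is a minimal sphere-tracing sketch in Python. It marches a ray through a signed distance field, stepping forward by the field value each time, since no surface can be closer than that distance. The unit-sphere field and the parameter names (`max_steps`, `eps`, `t_max`) are illustrative assumptions, not code from FORM.

```python
import math

def sdf_sphere(p, radius=1.0):
    """Signed distance from point p to a sphere of the given radius at the origin."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - radius

def sphere_trace(origin, direction, sdf, max_steps=128, eps=1e-4, t_max=100.0):
    """March along origin + t * direction (direction assumed normalized).

    Returns the hit distance t, or None if the ray escapes or runs out of steps.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:  # close enough to the surface: count it as a hit
            return t
        t += dist       # safe step: nothing in the field is closer than dist
        if t > t_max:
            break
    return None

print(sphere_trace((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), sdf_sphere))  # 4.0: hit at z = -1
```

Every pixel of a full-frame raymarcher runs a loop like this each frame, which is why the cost scales directly with display resolution.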

I’ve made interactive fractal shaders on many platforms and wanted to try a different approach. Here are some examples of raymarching in a fragment shader, along with some optimizations.

Shadertoy KIFS

Enhanced Sphere Tracing, Keinert et al. 2014
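A central idea in Keinert et al.'s paper is over-relaxation: step by omega times the distance (omega > 1), and fall back to plain sphere tracing when the enlarged step may have skipped a surface. The Python sketch below is a simplified version of that idea. It detects an overshoot when the unbounding spheres at two consecutive points no longer overlap, then undoes the step and continues with safe steps. The function names and the `omega=1.6` default are illustrative assumptions, and the paper's full method differs in its details.

```python
import math

def sdf_sphere(p, radius=1.0):
    return math.sqrt(sum(c * c for c in p)) - radius

def relaxed_sphere_trace(origin, direction, sdf, omega=1.6, max_steps=128,
                         eps=1e-4, t_max=100.0):
    """Simplified over-relaxed sphere tracing; returns (hit_t or None, steps)."""
    t = 0.0
    prev_radius = 0.0
    step = 0.0
    for i in range(max_steps):
        p = [o + t * d for o, d in zip(origin, direction)]
        radius = sdf(p)
        # Spheres of radius prev_radius and |radius| around consecutive points
        # must overlap; if they don't, the enlarged step may have skipped a surface.
        if omega > 1.0 and abs(radius) + prev_radius < step:
            t -= step            # undo the over-relaxed step
            step = prev_radius   # take a plain, safe sphere-tracing step instead
            omega = 1.0          # and stay conservative from here on
        else:
            if radius < eps:
                return t, i + 1
            prev_radius = radius
            step = omega * radius
        t += step
        if t > t_max:
            break
    return None, max_steps

print(relaxed_sphere_trace((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), sdf_sphere))  # (4.0, 3)
```

On this head-on ray the first relaxed step overshoots and the fallback kicks in; over-relaxation pays off on rays that travel a long way close to a surface, where plain sphere tracing takes many tiny steps.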

iOS OpenGL Visualizer

Temporal Reprojection: This effective technique reuses the previous frame’s depth values, reprojecting them into the current frame to save much of the computation. It fails when the distance field changes over time, and it generally suffers from ghosting artifacts around occlusions. Read more about my iOS fractal visualizer here.
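The core step of temporal reprojection is moving each previous-frame depth sample into the current view. Below is a minimal Python sketch with a deliberately simplified pinhole camera: it looks down +z, moves by translation only, and uses normalized image coordinates. The function names are hypothetical. A real renderer would use full view-projection matrices and would also have to deal with newly revealed regions, where no previous sample exists.

```python
def unproject(u, v, depth, cam_pos, focal):
    """Image point (u, v) plus view depth -> world point.

    Simplifying assumption: the camera looks down +z with no rotation.
    """
    return (cam_pos[0] + u * depth / focal,
            cam_pos[1] + v * depth / focal,
            cam_pos[2] + depth)

def project(point, cam_pos, focal):
    """World point -> (u, v, depth) in the camera, or None if it is behind the camera."""
    x, y, z = (point[i] - cam_pos[i] for i in range(3))
    if z <= 0.0:
        return None
    return (focal * x / z, focal * y / z, z)

def reproject(u, v, prev_depth, prev_cam, cur_cam, focal=1.0):
    """Move one previous-frame depth sample into the current frame."""
    world = unproject(u, v, prev_depth, prev_cam, focal)
    return project(world, cur_cam, focal)

# Camera moves 1 unit forward: a sample at depth 5 slides outward and gets closer.
print(reproject(0.2, 0.0, 5.0, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)))  # (0.25, 0.0, 4.0)
```

If the distance field itself animates, the stored world point is no longer on the surface, which is the failure case described above.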

HTC Vive

[FORM0] Temporal Reprojection: This was the first app I made for VR fractals. You could get decent frame rates if you lowered the Vive’s render resolution to about 60%.

None of these approaches was fast enough to render awesome fractals at consistently high frame rates at full resolution, so I needed a more scalable approximation that didn’t depend on the device’s resolution.

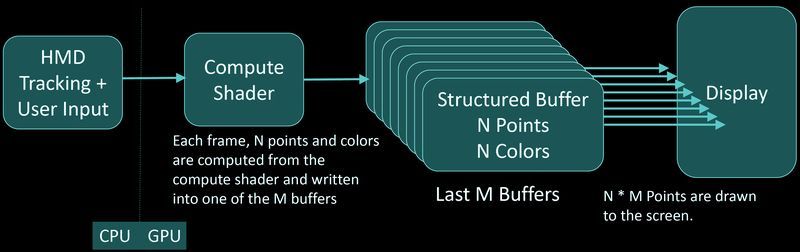

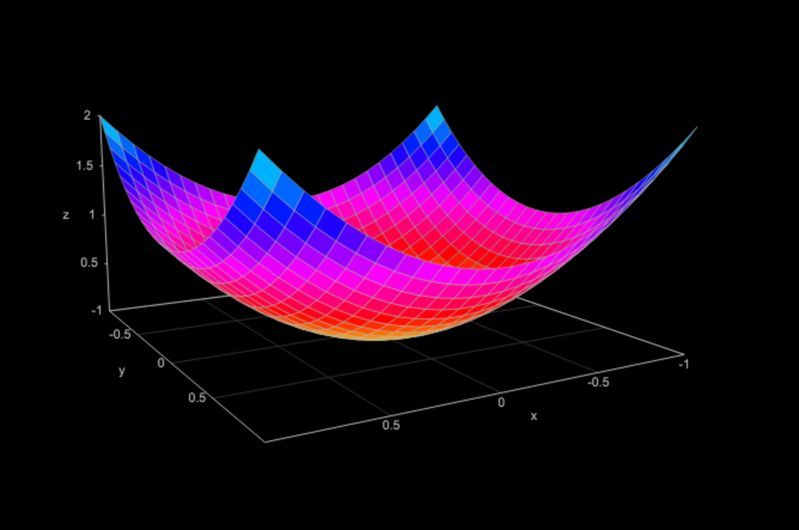

Rendering Architecture

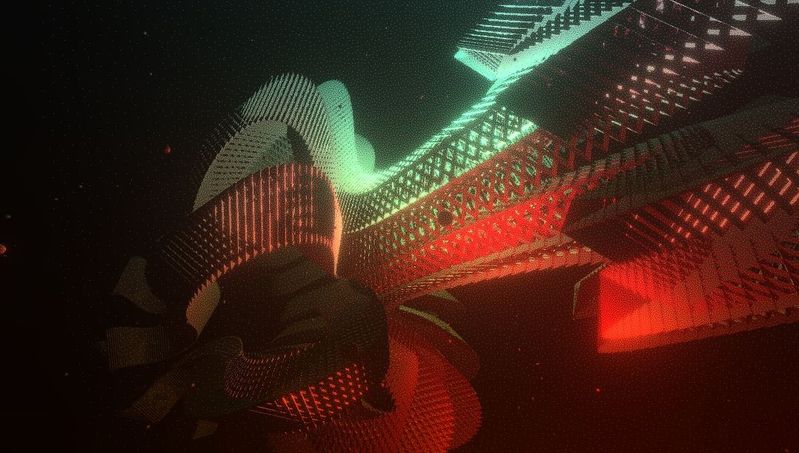

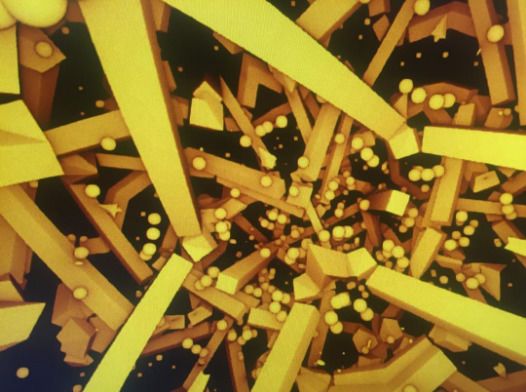

How else can you render a distance field? We can either do point-based splat rendering or run marching cubes on the field to extract the isosurface (more info on this approach coming soon). Since my goal here is to render fractals, points are a better bet for capturing high-frequency detail. I also saw this crazy talk by Alex Evans at Media Molecule about point-based rendering, so I thought it would be a good idea to try rendering fractals with it.
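A point splat still needs a normal for lighting. The post doesn't say how FORM shades its points. A common choice for distance fields is to take the normalized gradient of the field, estimated with central differences, as sketched below in Python. The step size `h` and the function names are assumptions.

```python
import math

def sdf_sphere(p, radius=1.0):
    return math.sqrt(sum(c * c for c in p)) - radius

def estimate_normal(sdf, p, h=1e-4):
    """Normalized central-difference gradient of the distance field at p."""
    grad = []
    for axis in range(3):
        offset = [0.0, 0.0, 0.0]
        offset[axis] = h
        plus = [pi + oi for pi, oi in zip(p, offset)]
        minus = [pi - oi for pi, oi in zip(p, offset)]
        grad.append((sdf(plus) - sdf(minus)) / (2.0 * h))
    length = math.sqrt(sum(g * g for g in grad))
    return tuple(g / length for g in grad)

# A splat is then a raymarched surface position plus its normal.
hit = (0.0, 0.0, -1.0)
splat = (hit, estimate_normal(sdf_sphere, hit))
```

Because each splat only needs the field evaluated near one point, splats capture fine fractal detail without the grid resolution limits of marching cubes.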

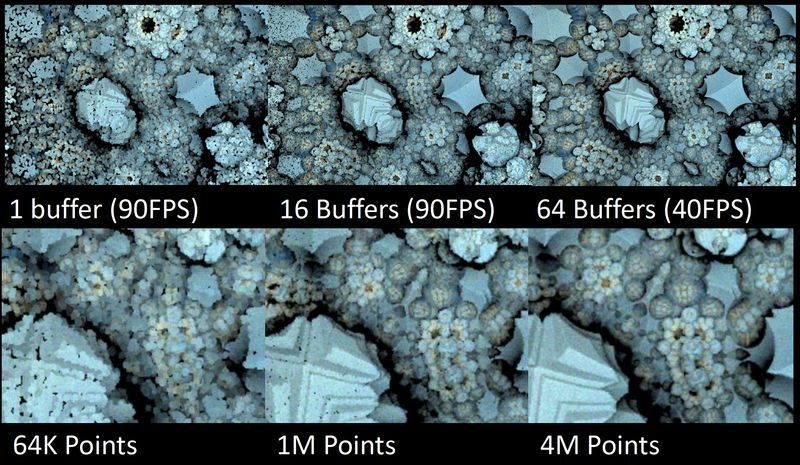

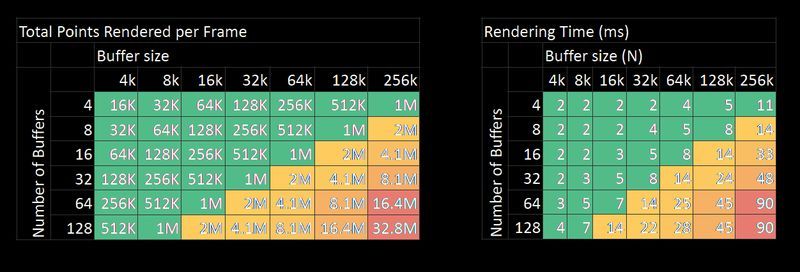

I present a new approach for rendering raymarched distance fields that enables complex distance field visualization at 90FPS and is especially suited to fractals. It works by progressively computing a point cloud in a compute shader, which decouples rendering from compute. The technique is also highly scalable: the buffer size and the number of buffers can be changed at runtime, so the system can be tuned to hit 90FPS on any platform or used for HD offline renders (100ms+ per frame).
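One way to picture the compute/render split is as a ring of point buffers. Each frame the compute pass refills only the oldest buffer with freshly raymarched points, while the renderer draws every buffer. The Python sketch below models that bookkeeping only. The class and method names are hypothetical, the `compute_points` callback stands in for the compute shader, and clearing the ring on resize is an assumption about how a runtime resize could be handled.

```python
class PointCloudRing:
    """Ring of fixed-size point buffers, one refreshed per frame (hypothetical model)."""

    def __init__(self, num_buffers, points_per_buffer):
        self.resize(num_buffers, points_per_buffer)

    def resize(self, num_buffers, points_per_buffer):
        # Runtime resize: start over with empty buffers of the new shape.
        self.points_per_buffer = points_per_buffer
        self.buffers = [[] for _ in range(num_buffers)]
        self.next_index = 0

    def compute_step(self, compute_points):
        # Overwrite the oldest buffer; the others keep points from earlier frames.
        self.buffers[self.next_index] = compute_points(self.points_per_buffer)
        self.next_index = (self.next_index + 1) % len(self.buffers)

    def points_to_render(self):
        # The renderer draws every buffer, so the cloud accumulates over frames.
        return [p for buf in self.buffers for p in buf]


ring = PointCloudRing(num_buffers=3, points_per_buffer=4)
for frame in range(5):
    # Stand-in for the compute shader: tag each point with the frame that produced it.
    ring.compute_step(lambda n: [(frame, i) for i in range(n)])
print(len(ring.points_to_render()))  # 12: 3 buffers x 4 points, from frames 2, 3 and 4
```

The per-frame compute cost is fixed by one buffer's size, while the rendered detail grows with the number of buffers, which is what makes the approach independent of display resolution.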

Benchmarks

Benchmark system: HTC Vive + GTX 980

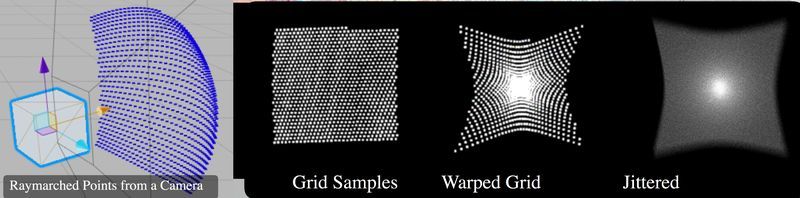

Sampling Pattern

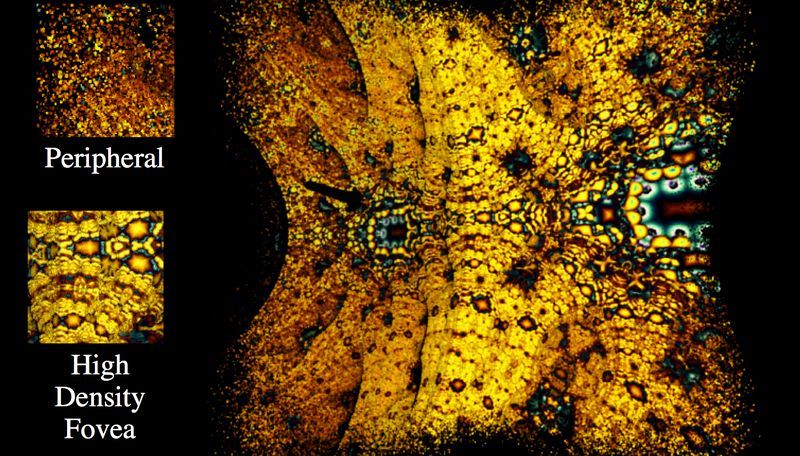

As an optimisation, I use a foveated, stochastic sampling pattern to choose which points to raymarch, concentrating samples toward the center of the view. This works pretty well for current VR headsets, but it could obviously be improved with actual eye tracking.
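The exact distribution isn't given. One simple way to get a foveated, stochastic pattern is to draw sample positions in a normalized disk around the view center with a radius skewed toward the middle. Taking `r = u ** k`, with `u` uniform in [0, 1) and `k > 1`, packs samples near the center while still covering the periphery sparsely. In the Python sketch below, `k`, the unit-disk screen mapping, and the function name are all hypothetical choices.

```python
import math
import random

def foveated_samples(count, k=2.0, seed=None):
    """Return `count` (x, y) points in the unit disk, denser toward the center.

    r = u**k with k > 1 concentrates samples near the origin (the assumed
    fixation point); k = 0.5 would give uniform density over the disk.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(count):
        r = rng.random() ** k
        theta = rng.uniform(0.0, 2.0 * math.pi)
        samples.append((r * math.cos(theta), r * math.sin(theta)))
    return samples

pts = foveated_samples(10000, seed=1)
inner = sum(1 for x, y in pts if x * x + y * y < 0.25)  # samples with r < 0.5
print(inner / len(pts))  # roughly 0.71, versus 0.25 for a uniform disk
```

Drawing a fresh random set every frame means the accumulated point cloud fills in gaps over time instead of repeating the same holes.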

User Interface Features

3D Trackball Manipulation

Same as the trackball before, but instead of doing it in the shader, it’s all accomplished with Unity hierarchy objects, which makes the code super simple: TrackballControl.cs

When either grip is released, the point cloud is unparented back to the root.
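In a scene hierarchy, parenting the point cloud to a pivot that follows the hands means the cloud simply inherits the pivot's transform. The Python sketch below reproduces that math without Unity, under simplifying assumptions: the pivot sits at the midpoint of the two controllers, its scale is the ratio of current to initial hand separation, and rotation is ignored. All names are hypothetical; this is not TrackballControl.cs.

```python
import math

def midpoint(a, b):
    return tuple((ai + bi) / 2.0 for ai, bi in zip(a, b))

def distance(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

class TwoHandGrab:
    """Hypothetical pivot between two controllers; the object is 'parented' to it."""

    def __init__(self, left, right, obj_pos, obj_scale):
        # On grab: record the object's transform relative to the pivot
        # (what reparenting does implicitly in a scene hierarchy).
        self.start_pivot = midpoint(left, right)
        self.start_span = distance(left, right)
        self.local_offset = tuple(o - p for o, p in zip(obj_pos, self.start_pivot))
        self.start_scale = obj_scale

    def update(self, left, right):
        """Return the object's new (position, scale) while both grips are held."""
        pivot = midpoint(left, right)
        s = distance(left, right) / self.start_span
        pos = tuple(p + s * o for p, o in zip(pivot, self.local_offset))
        return pos, self.start_scale * s

grab = TwoHandGrab((-0.5, 1.0, 0.0), (0.5, 1.0, 0.0), obj_pos=(0.0, 1.0, 2.0), obj_scale=1.0)
print(grab.update((-1.0, 1.0, 0.0), (1.0, 1.0, 0.0)))  # ((0.0, 1.0, 4.0), 2.0)
```

Spreading the hands to twice their starting distance doubles the object's scale about the pivot. On release, the object keeps whatever transform it had last, which is the effect of unparenting.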

3D Fractal Transform controls

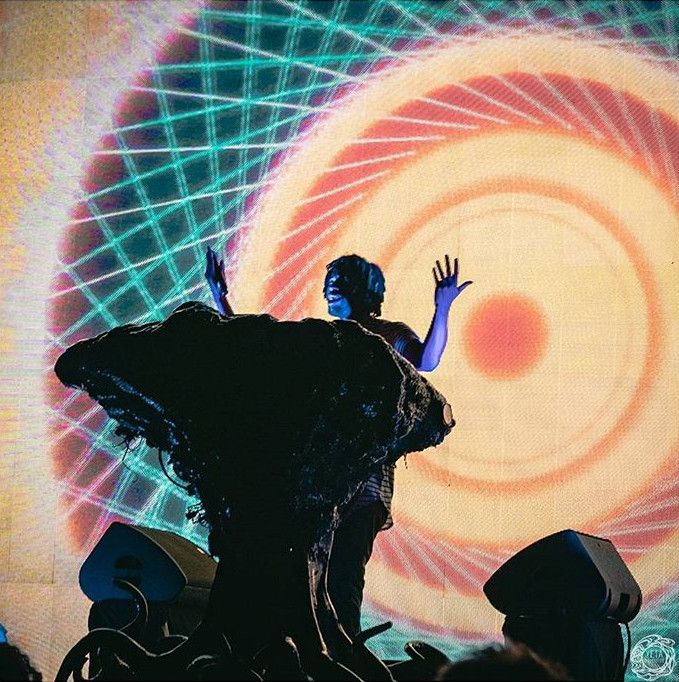

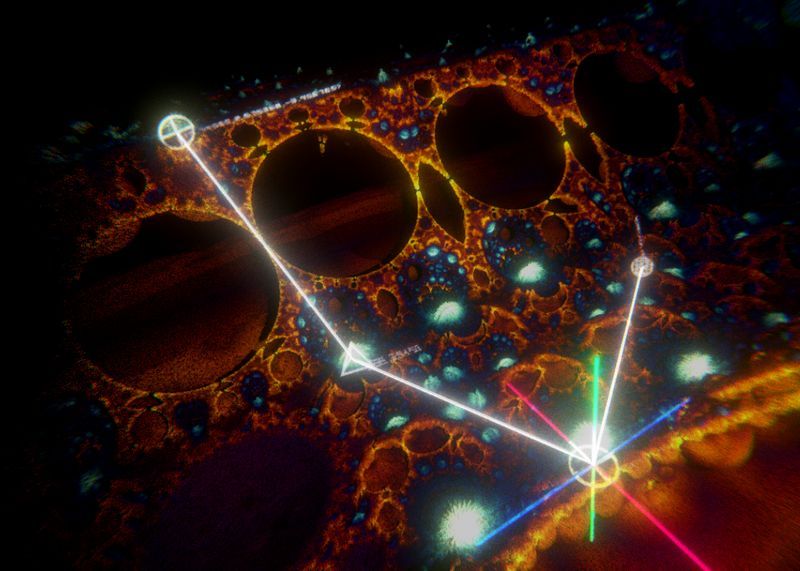

Fractals are created by iteratively applying a transform to each point and evaluating a distance function on the result; this defines the surface. All of this can seem very abstract when writing a shader for a 2D screen, but in VR these transformations can be visualized as interactive, grabbable 3D points. Interacting with them builds a more intuitive understanding of fractal mechanics. At the very least, it allows for hours of fun flying through infinitely customizable fractal landscapes.
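For a concrete picture, here is the classic Sierpinski-tetrahedron distance estimator from the KIFS family, written in Python. Each iteration folds the point across three planes and then scales it about an offset point. An `offset` like this is the kind of 3D point that could be exposed as a grabbable handle, but that mapping, the defaults, and the function name are illustrative assumptions rather than FORM's actual transforms.

```python
import math

def sierpinski_de(p, offset=(1.0, 1.0, 1.0), scale=2.0, iterations=12):
    """Distance estimate to a Sierpinski tetrahedron (KIFS: fold, then scale)."""
    x, y, z = p
    for _ in range(iterations):
        # Reflective folds across the planes x+y=0, x+z=0 and y+z=0.
        if x + y < 0.0:
            x, y = -y, -x
        if x + z < 0.0:
            x, z = -z, -x
        if y + z < 0.0:
            y, z = -z, -y
        # Scale about the offset point (the "transform" a user might grab).
        x = x * scale - offset[0] * (scale - 1.0)
        y = y * scale - offset[1] * (scale - 1.0)
        z = z * scale - offset[2] * (scale - 1.0)
    # Undo the accumulated scaling to get a distance in the original space.
    return math.sqrt(x * x + y * y + z * z) * scale ** (-iterations)

print(sierpinski_de((10.0, 10.0, 10.0)))  # roughly 15.59, the distance to the vertex (1, 1, 1)
```

The estimator can be passed as the distance function to any sphere tracer; moving `offset` or changing `scale` reshapes the whole fractal.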

Basic VR Slider UI

This is more or less the SteamVR Interaction System Linear Drive.
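A linear-drive slider comes down to projecting the hand position onto the slider's track and clamping the result. The Python sketch below assumes the track runs between two world-space endpoints and that the 0..1 result is remapped to a parameter range. The names are hypothetical, and this is not the SteamVR component's code.

```python
def linear_drive(hand, start, end, lo, hi):
    """Project a hand position onto the segment start->end; return a value in [lo, hi]."""
    axis = [e - s for s, e in zip(start, end)]
    rel = [h - s for s, h in zip(start, hand)]
    length_sq = sum(a * a for a in axis)
    t = sum(r * a for r, a in zip(rel, axis)) / length_sq  # 0 at start, 1 at end
    t = min(max(t, 0.0), 1.0)  # clamp to the ends of the track
    return lo + t * (hi - lo)

print(linear_drive((0.25, 0.3, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), 0.0, 10.0))  # 2.5
```

Because only the component along the track counts, the hand can drift off the slider's axis without affecting the value.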

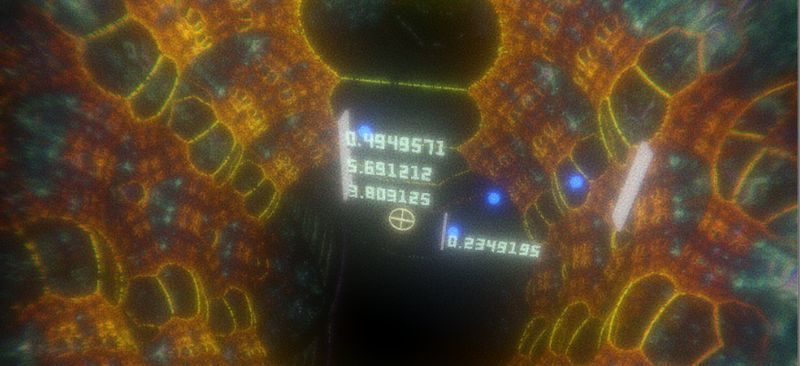

I have two menus:

- Rendering: controls the cosine gradient parameters.

- Raymarch: controls the termination criteria, stepping ratio, and other raymarching parameters.
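The cosine gradient is presumably the well-known procedural palette color(t) = a + b·cos(2π(c·t + d)), applied per RGB channel with four 3-vector parameters a, b, c and d; that identification is an assumption. What drives `t` per point isn't specified here. A Python sketch:

```python
import math

def cosine_gradient(t, a, b, c, d):
    """Per-channel cosine palette: a + b * cos(2*pi*(c*t + d))."""
    return tuple(ai + bi * math.cos(2.0 * math.pi * (ci * t + di))
                 for ai, bi, ci, di in zip(a, b, c, d))

# A simple grayscale ramp: white at t = 0, black at t = 0.5.
a = b = (0.5, 0.5, 0.5)
c = (1.0, 1.0, 1.0)
d = (0.0, 0.0, 0.0)
print(cosine_gradient(0.0, a, b, c, d))  # (1.0, 1.0, 1.0)
```

Here `a` sets the mean color, `b` the contrast, `c` how many times each channel cycles over t, and `d` each channel's phase. Offsetting `d` per channel is what turns the ramp into a colored gradient.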

These are grouped into sets of 4 floats, each uploaded to the compute shader as a float4.
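Grouping sliders in fours matches the 16-byte float4 type on the GPU. The Python sketch below uses the standard `struct` module to pack a flat list of slider values into little-endian float4 groups, zero-padding the last group. The slider values are hypothetical. In Unity the upload itself would typically go through a call such as `ComputeShader.SetVector` rather than raw bytes.

```python
import struct

def pack_float4_groups(values):
    """Split floats into float4 groups (zero-padding the last) and pack them as bytes."""
    padded = list(values) + [0.0] * (-len(values) % 4)
    groups = [tuple(padded[i:i + 4]) for i in range(0, len(padded), 4)]
    blob = b"".join(struct.pack("<4f", *g) for g in groups)  # 16 bytes per float4
    return groups, blob

# Hypothetical raymarch menu with 6 sliders -> 2 float4s.
raymarch_sliders = [0.001, 0.9, 200.0, 128.0, 0.5, 1.0]
groups, blob = pack_float4_groups(raymarch_sliders)
print(len(groups), len(blob))  # 2 32
```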

It also comes with a handy grabber handle for moving the menus around.

Screen Compute Buffer Interface

Offers basic control over how much compute versus rendering happens each frame.

PointSize: controls the point sprite size. Larger points fill gaps between samples at a slight cost in rendering time.

Points: number of points per buffer (in thousands).

Buffers: number of buffers to render each frame.
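As a rough worked example of what these settings mean: the points drawn per frame are Points × 1000 × Buffers, and 90FPS leaves about 1000 / 90 ≈ 11.1 ms per frame for compute and rendering together. The Python sketch below computes that total and includes a hypothetical helper that nudges the buffer count based on a measured frame time. The helper illustrates how the Buffers setting could be automated; the headroom value and limits are assumptions.

```python
FRAME_BUDGET_MS = 1000.0 / 90.0  # ~11.1 ms per frame at 90 FPS

def points_per_frame(points_thousands, buffers):
    """Total points drawn per frame for the Points (in thousands) and Buffers settings."""
    return points_thousands * 1000 * buffers

def adjust_buffers(buffers, frame_ms, budget_ms=FRAME_BUDGET_MS, headroom=0.9,
                   min_buffers=1, max_buffers=64):
    """Hypothetical controller: drop a buffer when over budget, add one when well under."""
    if frame_ms > budget_ms:
        return max(min_buffers, buffers - 1)
    if frame_ms < headroom * budget_ms:
        return min(max_buffers, buffers + 1)
    return buffers

print(points_per_frame(256, 8))  # 2048000
print(adjust_buffers(8, 12.5))   # 7: over the ~11.1 ms budget
print(adjust_buffers(8, 10.5))   # 8: within the headroom band, hold steady
```

The headroom band keeps the count from oscillating by one buffer every frame.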

Instructions

- Currently Vive only.

- Run SteamVR.

- Download the binary (15MB).

- Unzip, run the app, and hit the space bar to cycle through presets.

Controls

- For more advanced options, look at the left controller, where 5 menus/UI panels can be toggled on and off. Use the Z key to toggle this UI.

- Use the thumb pads on the controller to fly. Speed is proportional to the squared distance from your thumb to the center of the pad.

- The flight direction is the actual world space vector from the center of the pad to the thumb.
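Put together, the flying controls amount to velocity = k × r² × direction, where r is the thumb's distance from the pad center. The Python sketch below assumes the pad-center-to-thumb offset has already been converted to a world-space vector and normalized so that the pad's rim is at r = 1. `max_speed` is a hypothetical tuning constant.

```python
import math

def flight_velocity(thumb_offset_world, max_speed=5.0):
    """Velocity from the world-space vector (pad center -> thumb), rim at length 1.

    Speed grows with the squared distance from the pad center; the
    direction is the offset vector itself.
    """
    r = math.sqrt(sum(c * c for c in thumb_offset_world))
    if r == 0.0:
        return (0.0, 0.0, 0.0)
    speed = max_speed * r * r
    return tuple(speed * c / r for c in thumb_offset_world)

print(flight_velocity((0.0, 0.0, 0.5)))  # (0.0, 0.0, 1.25)
```

Squaring the distance gives fine control near the center of the pad while still allowing fast travel at the rim: halfway out moves at a quarter of full speed.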