Feb 2022

AR NFT Viewer

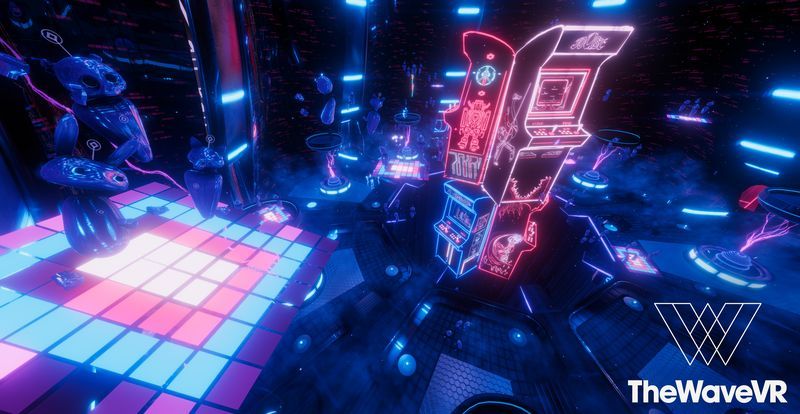

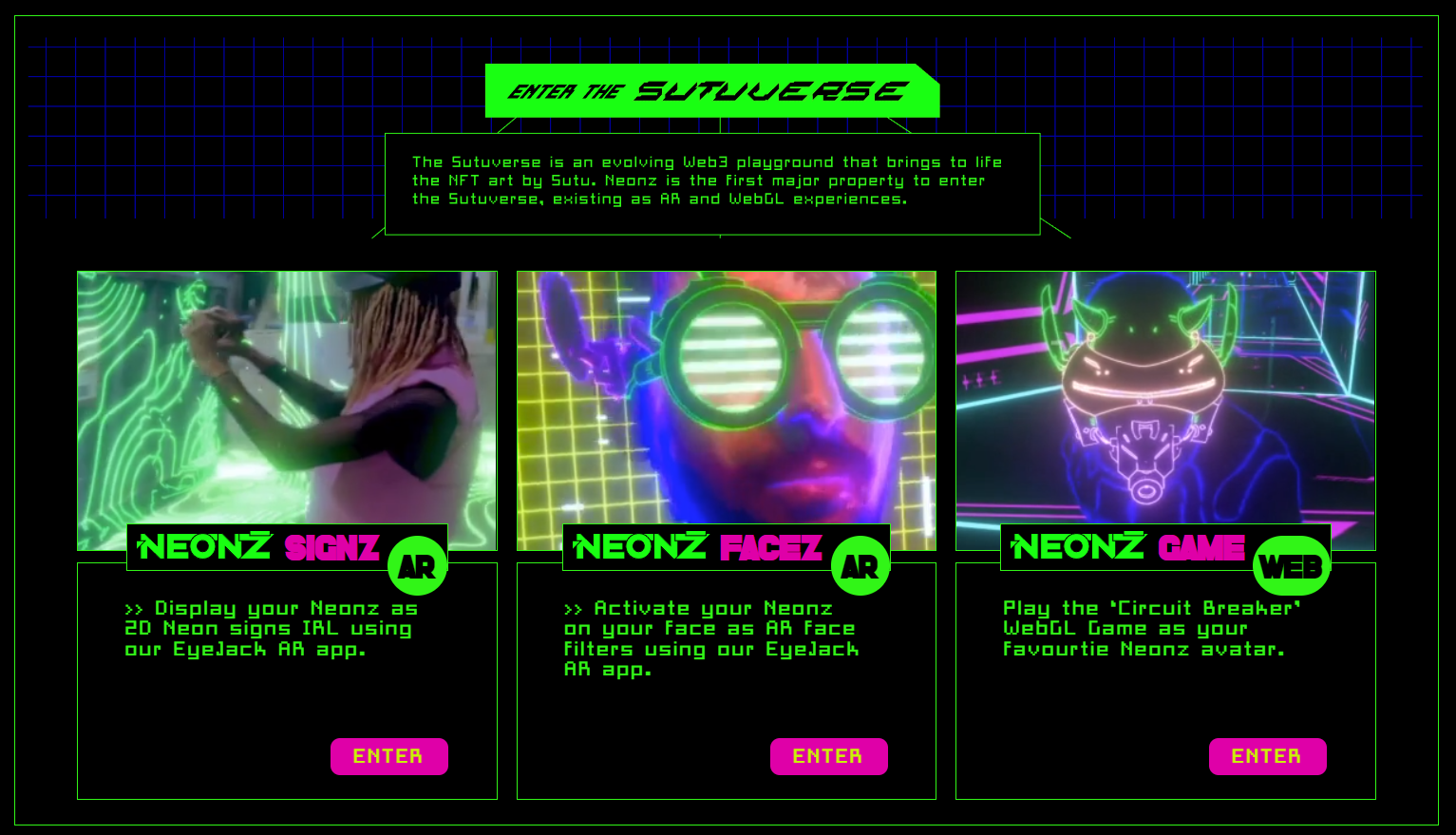

In 2021, Sutu launched one of the first generative NFT series on the Tezos blockchain, a series of 10,000 profile pics called Neonz. I think this is one of the few great examples that demonstrates how to use web3 tech across platforms, while also being really cool art in its own right.

This was developed by the folks at Eyejack. I got on board to help them with some AR rendering effects for iOS and Android.

I worked on a couple of components of the SUTUVERSE:

- Face filter - https://www.neonz.xyz/neonz-facez
- NFT ART Viewer - https://www.neonz.xyz/neonz-signz

AR Lighting

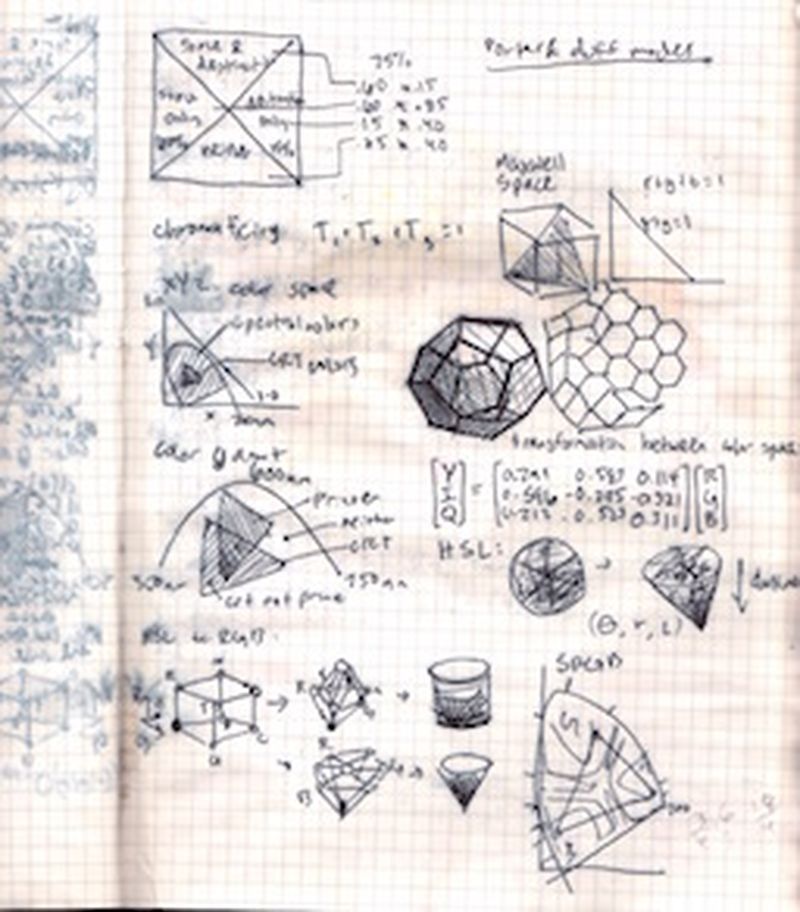

The new ARKit stuff has some awesome features for spatial perception - you can use a realtime 3D scanned mesh to composite new types of lighting into a scene. On Android, you can get an estimated depth map, and compute lighting that way as well:

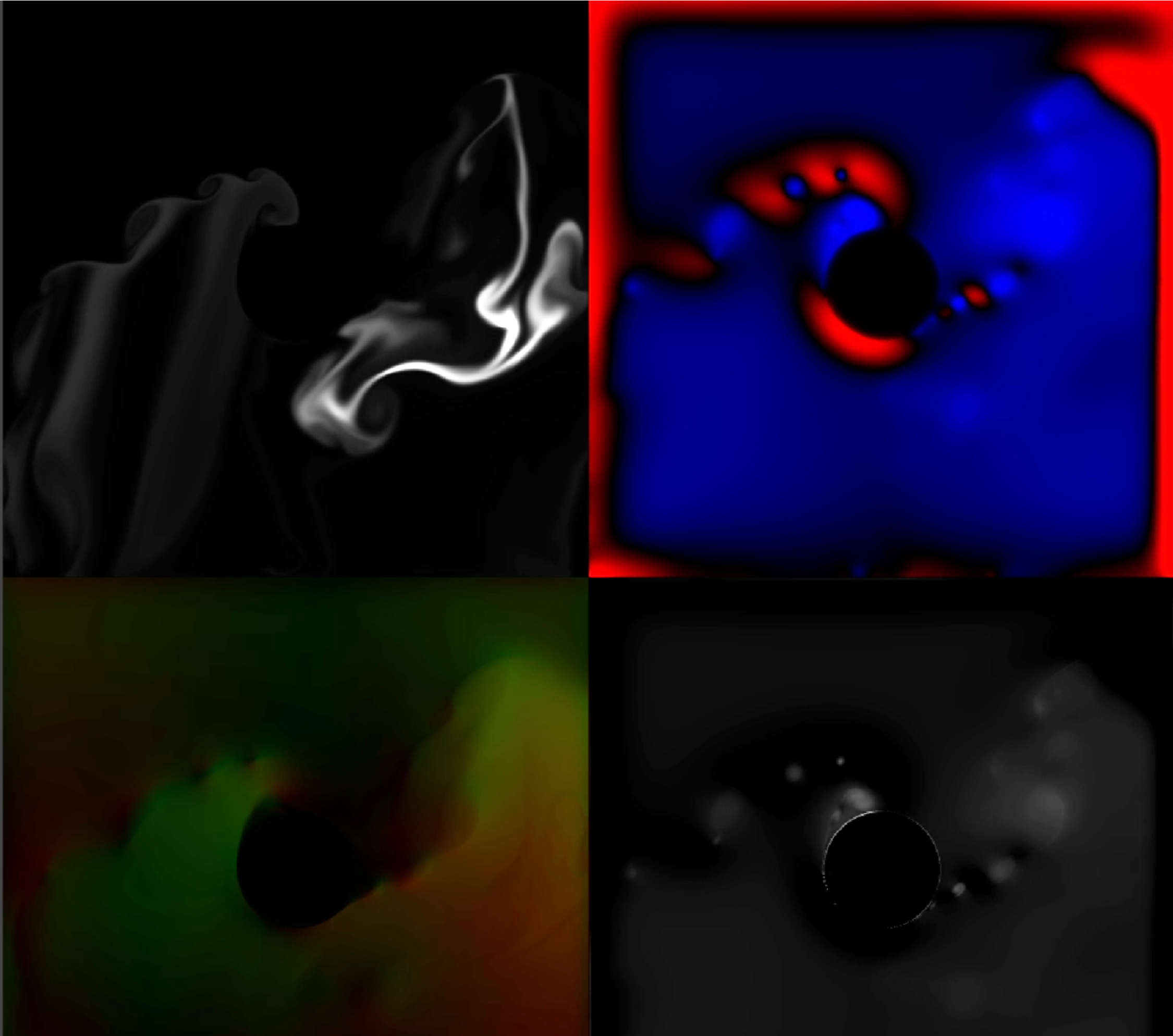

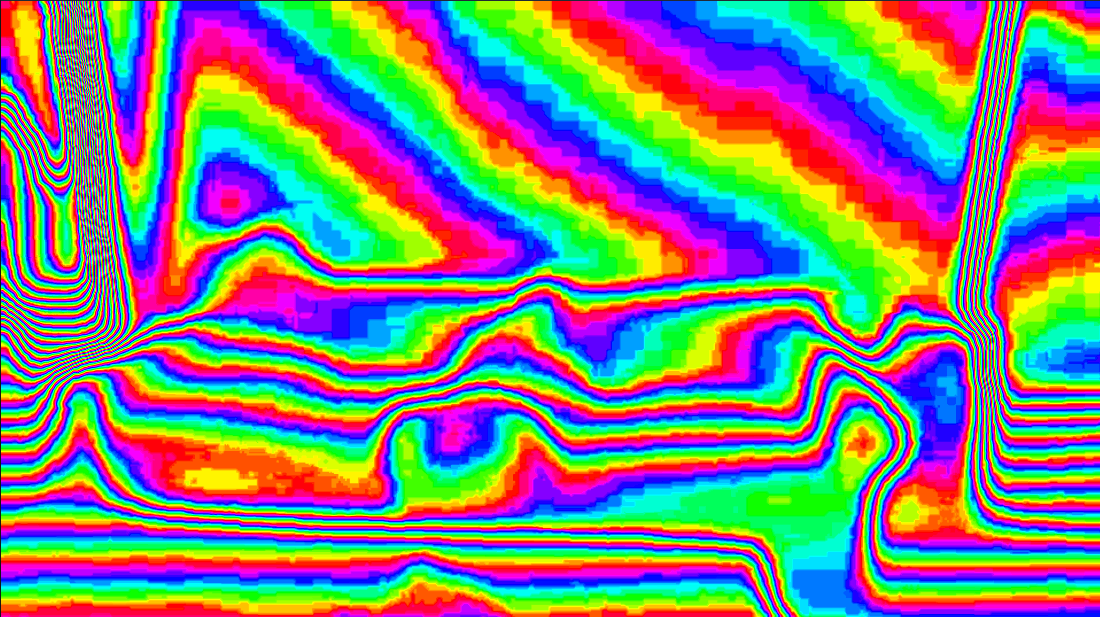

Color and Depth

The depth is not great quality, I think because it is computed at a very low res and then just interpolated. Somehow it is both too smooth and too blocky at the same time, which is impressive. I think this was set to the “Fastest” quality, so I’m not expecting anything great. (The Android results are even worse.)

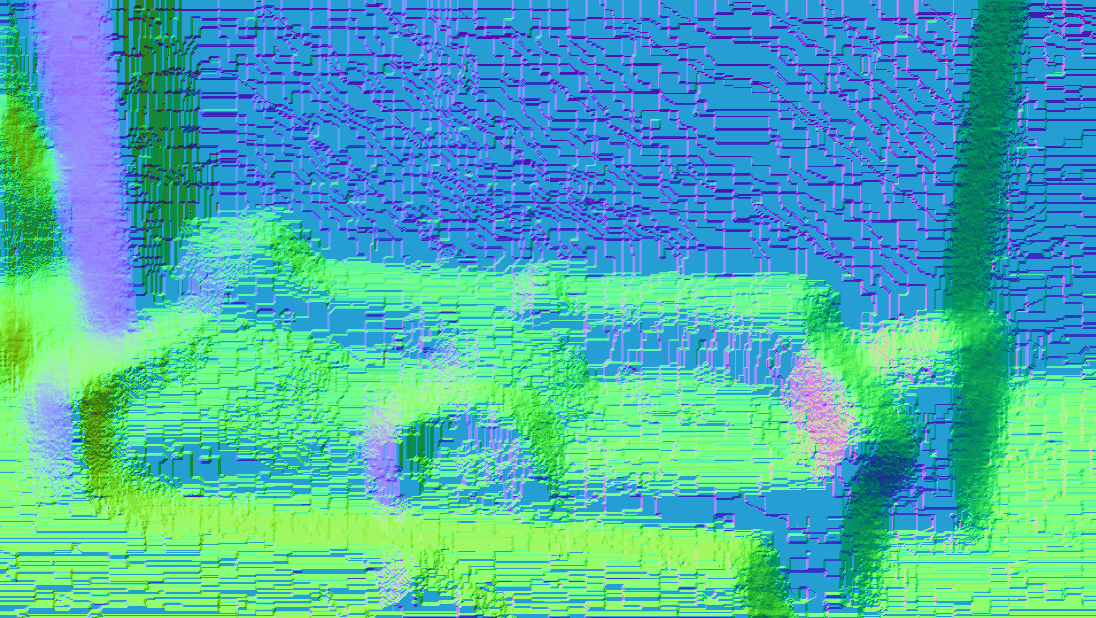

Normals

Using the depth image, you can estimate normals. There are a lot of blocky artifacts because of the way the depth image is computed, so I do some smoothing.
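As a rough illustration of the idea (not the actual shader from this project), here is a minimal CPU sketch in Python. It assumes a pinhole camera with hypothetical intrinsics `fx, fy, cx, cy`, back-projects neighbouring depth pixels to camera space, and takes the cross product of the horizontal and vertical differences. The 3x3 `box_blur` is just a stand-in for "some smoothing"; the filter actually used is not specified here.

```python
import math

def unproject(u, v, d, fx, fy, cx, cy):
    # Pinhole back-projection: pixel (u, v) at depth d -> camera-space point.
    return ((u - cx) * d / fx, (v - cy) * d / fy, d)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return tuple(c / length for c in v) if length > 0 else (0.0, 0.0, -1.0)

def box_blur(depth):
    # 3x3 box blur with clamped edges (assumed smoothing, for illustration only).
    h, w = len(depth), len(depth[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += depth[yy][xx]
            out[y][x] = total / 9.0
    return out

def estimate_normals(depth, fx, fy, cx, cy):
    # Central differences on back-projected points; border pixels keep a default normal.
    h, w = len(depth), len(depth[0])
    normals = [[(0.0, 0.0, -1.0)] * w for _ in range(h)]
    for v in range(1, h - 1):
        for u in range(1, w - 1):
            left = unproject(u - 1, v, depth[v][u - 1], fx, fy, cx, cy)
            right = unproject(u + 1, v, depth[v][u + 1], fx, fy, cx, cy)
            up = unproject(u, v - 1, depth[v - 1][u], fx, fy, cx, cy)
            down = unproject(u, v + 1, depth[v + 1][u], fx, fy, cx, cy)
            ddx = tuple(r - l for r, l in zip(right, left))
            ddy = tuple(d - t for d, t in zip(down, up))
            # Argument order chosen so a wall facing the camera gets a normal along -z.
            normals[v][u] = normalize(cross(ddy, ddx))
    return normals
```

Blurring the depth first (`estimate_normals(box_blur(depth), ...)`) trades detail for fewer block edges showing up in the normals.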

Basic Lights

Directional Light, Point Light

You can then do most kinds of lighting calculations you would do in any real-time renderer, using the estimated surface normal and the world position.
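To make that concrete, here is a small Python sketch of a Lambert directional light and a point light evaluated from a normal and a world position. The function names, the windowed falloff `(1 - dist / radius)^2`, and the clamped additive blend are all assumptions for illustration; the real version would live in a shader and may use a different attenuation.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v) if length > 0 else v

def directional_light(normal, to_light, color, intensity=1.0):
    # Lambert term; to_light points from the surface toward the light.
    n_dot_l = max(0.0, dot(normalize(normal), normalize(to_light)))
    return tuple(c * intensity * n_dot_l for c in color)

def point_light(world_pos, normal, light_pos, color, intensity=1.0, radius=2.0):
    offset = sub(light_pos, world_pos)
    dist = math.sqrt(dot(offset, offset))
    if dist == 0.0 or dist >= radius:
        return (0.0, 0.0, 0.0)
    n_dot_l = max(0.0, dot(normalize(normal), normalize(offset)))
    # Assumed falloff: fades smoothly to zero at `radius`.
    falloff = (1.0 - dist / radius) ** 2
    return tuple(c * intensity * n_dot_l * falloff for c in color)

def add_light(camera_rgb, light_rgb):
    # Additive blend onto the camera image, clamped to [0, 1].
    return tuple(min(1.0, c + l) for c, l in zip(camera_rgb, light_rgb))

# A wall 2 m away facing the camera, lit by a magenta point light 1 m in front of it.
glow = point_light((0.0, 0.0, 2.0), (0.0, 0.0, -1.0), (0.0, 0.0, 1.0), (1.0, 0.0, 1.0))
pixel = add_light((0.2, 0.2, 0.2), glow)
```

Because the light is simply added on top of the camera pixel, surfaces facing away from the light (negative `n_dot_l`) are left untouched.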

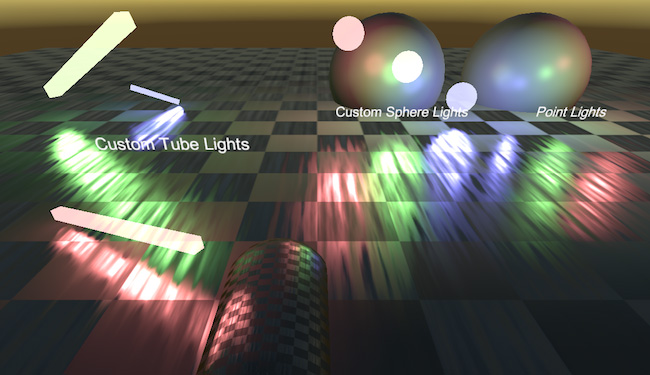

Analytic lights

Then you can compute any type of light and just add it on to the scene.

I based this example on some Unity rendering command buffer samples, such as:

https://docs.unity3d.com/2018.3/Documentation/Manual/GraphicsCommandBuffers.html

iOS compatibility

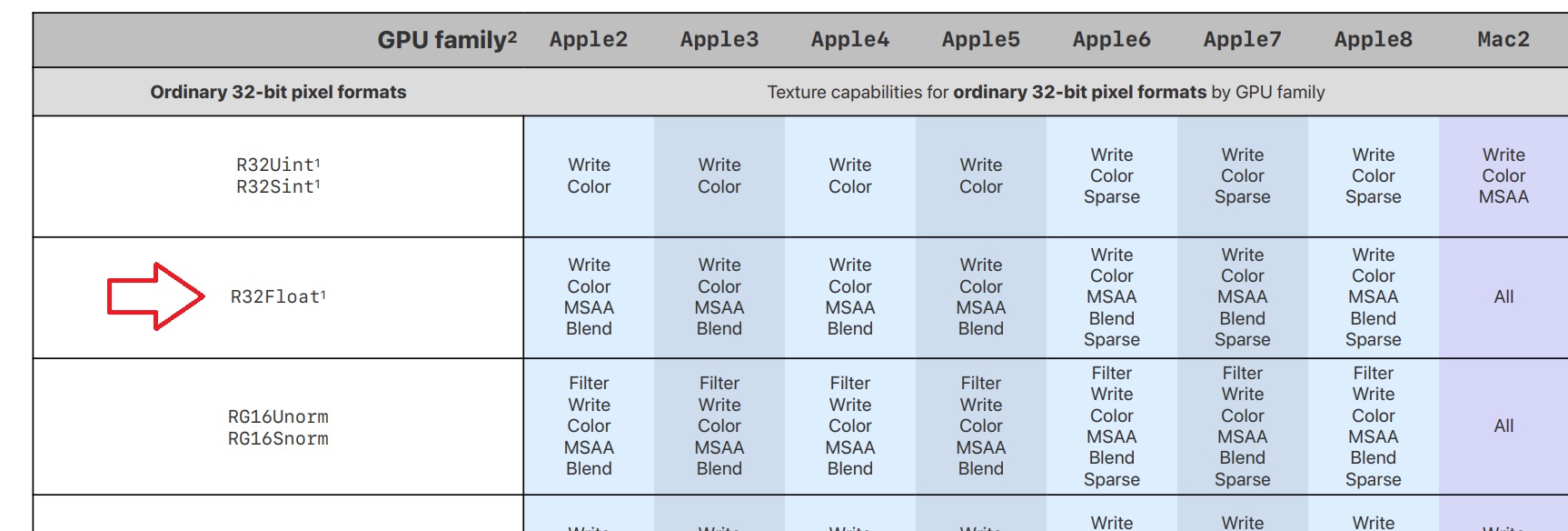

R32 textures don’t support hardware filtering on Metal???

https://developer.apple.com/metal/Metal-Feature-Set-Tables.pdf

The depth buffer comes in from ARFoundation as a single-channel, 32-bit float texture. For some reason, on Metal, the GPU does not support texture filtering on R32 textures? I have no idea why, so I just blit this into an R16 texture, which does support filtering. Come to think of it, that may be causing some of the blocky artifacts above...
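One way to sanity-check that hunch is to look at how coarse a 16-bit float actually is at typical room distances. The sketch below assumes the R16 target is a half-precision float format (not a normalized integer one) and that depth is stored in metres; both are assumptions. It uses Python's `struct` half-float format to round-trip a value and computes the spacing between representable values.

```python
import math
import struct

def to_half(x):
    # Round-trip through IEEE 754 half precision, as a 16-bit float channel would store it.
    return struct.unpack('<e', struct.pack('<e', x))[0]

def half_step(x):
    # Spacing between adjacent half-precision values near x
    # (valid for positive x in the normal range; half has 10 mantissa bits).
    exponent = math.floor(math.log2(x))
    return 2.0 ** (exponent - 10)

for metres in (0.5, 1.5, 3.0, 6.0):
    print(metres, "m -> step of", half_step(metres) * 1000.0, "mm")
```

Under those assumptions, depth between 2 m and 4 m is quantized in steps of about 2 mm. That is small, but normals are computed from differences between neighbouring depth samples, so the steps could plausibly add to the banding rather than being the whole cause.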

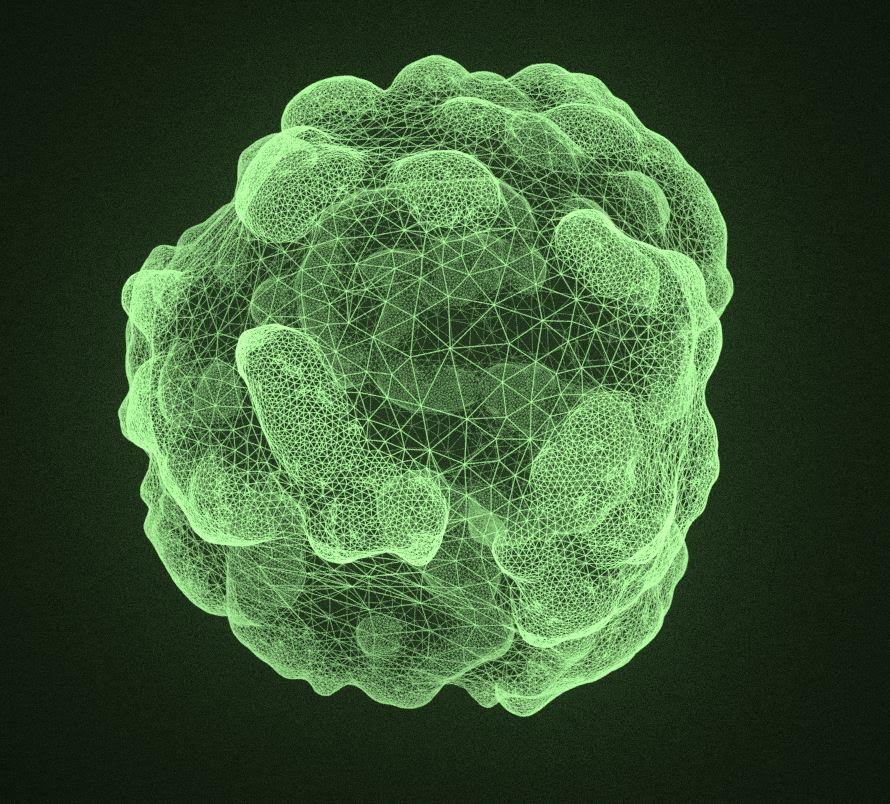

Environment effects

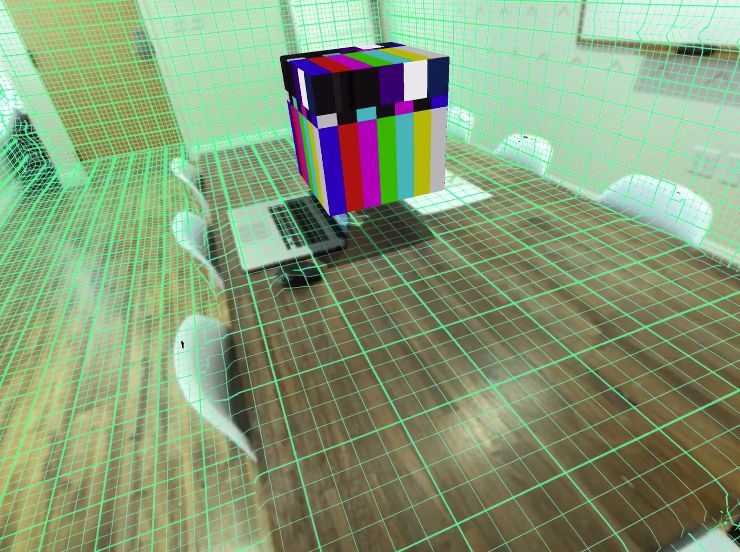

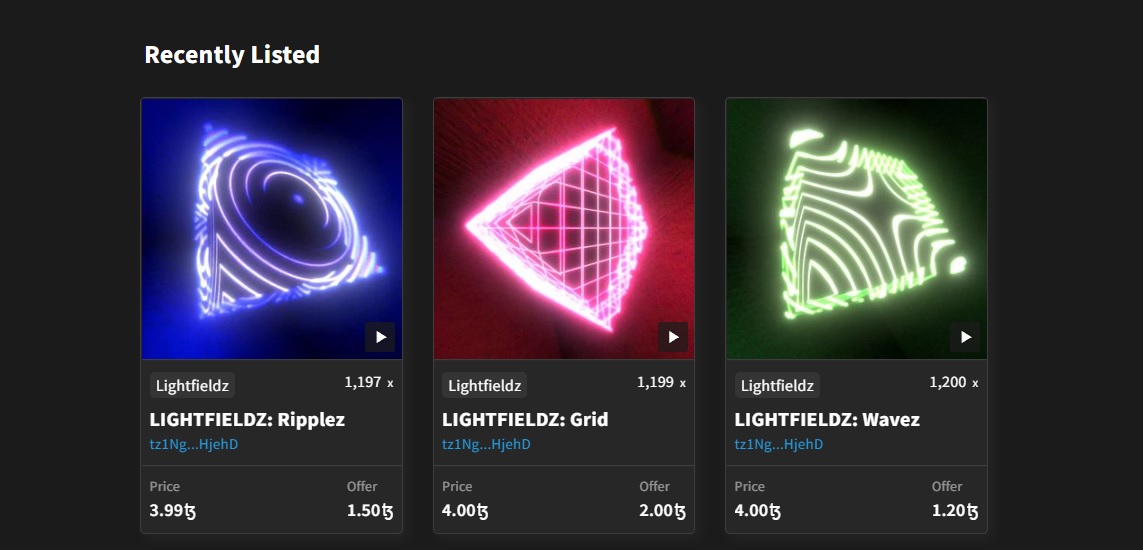

I also created some fun effects for the experience which add MORE NEON into the world. On Android, this uses the depth buffer; on iOS with LiDAR it uses the room scan, and on other iOS devices it uses “detected planes” (not ideal).

And wouldn’t you know, these are also released as some NFTs that unlock the effect in the app. They also unlock secret pathways in the 3D platformer game.

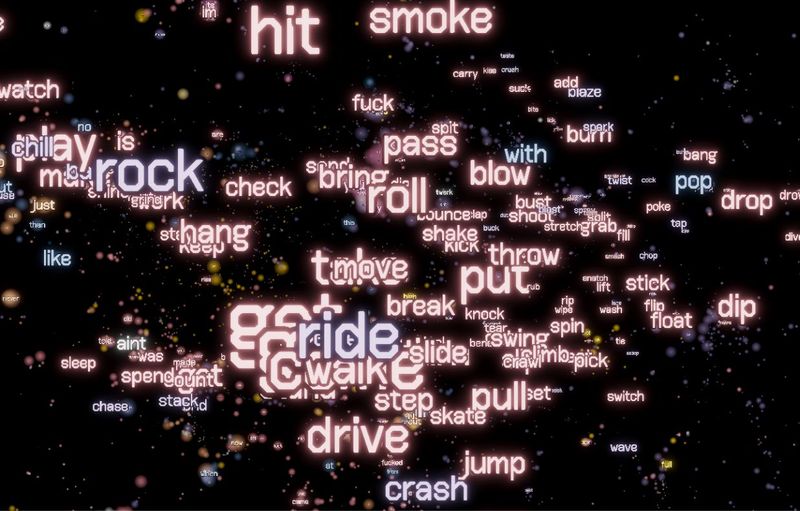

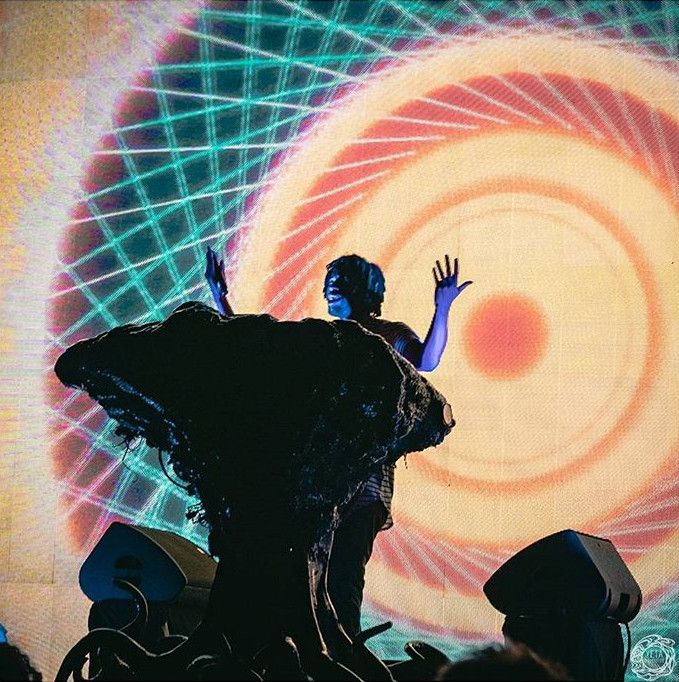

AR Face Filter

ARKit face tracking vs iOS

Face Tracking

Forehead Extrapolation

Some of the tattoos extend onto the forehead, and neither ARKit nor ARCore tracks a mesh on the forehead. So I had to extend a procedural spline up and over the forehead. This also meant the head had to be re-UV’d to accommodate this.

Human Segmentation

I used a library called ARKitStreamer (https://github.com/asus4/ARKitStreamer) which streams the AR data live over NDI to the Unity editor. This project would have taken so much longer if this weren’t available, so huge shout out to Koki Ibukuro (https://github.com/asus4) !!! <3 It’s really a shame that Unity doesn’t support this natively. I have no idea why developing AR in Unity is so hard…

Human segmentation is a mask that ARFoundation supplies, which can mask out the background grid.
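Per pixel, the masking amounts to a blend between the effect and the camera image, driven by the mask value. A minimal Python sketch, assuming a mask of 1.0 where a person is detected and 0.0 elsewhere (the function names are hypothetical, and the real version would run in a shader):

```python
def lerp(a, b, t):
    return a + (b - a) * t

def composite(camera_rgb, effect_rgb, human_mask):
    # human_mask = 1.0 -> show the camera pixel (person in front of the effect),
    # human_mask = 0.0 -> show the effect (e.g. the background grid).
    t = min(1.0, max(0.0, human_mask))
    return tuple(lerp(e, c, t) for e, c in zip(effect_rgb, camera_rgb))

person_pixel = composite((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 1.0)      # camera wins
background_pixel = composite((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 0.0)  # effect wins
```

Soft mask values between 0 and 1 give a feathered edge around the person instead of a hard cutout.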